Preface

SovScaDesDisMaLOps := Sovereign Scalable Data Engineering Sciences and Distributed Machine Learning Operations

SovScaDesDisMaLOps Workshop for the Wallenberg AI, Autonomous Systems and Software Program (WASP-AI) Community Building Summer School in 2025.

This book contains learning material divided into slide decks. Each deck constitutes a lesson. Several lessons combine to form a module, and a series of modules constitutes a learning workshop.

This is the book version of the material. You can also see the lessons as slide decks and as pure markdown here:

Credits

The development of this course is supported by the Wallenberg AI, Autonomous Systems and Software Program funded by Knut and Alice Wallenberg Foundation.

The course materials are open sourced as a contribution to the community of learners. The ideas and concepts as well as working code will be made available here for self-study and group study by anyone who is interested in SovScaDesDisMaLOps.

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. We encourage the use of this material, under the terms of the above license, in the production and/or delivery of commercial or open-source learning programmes.

Copyright (c) VakeWorks, 2025

Definition

Definition of SovScaDesDisMaLOps

- SovScaDesDisMaLOps := Sovereign Scalable Data Engineering Sciences and Distributed Machine Learning Operations

- DesDisMaL := Data Engineering Sciences and Distributed Machine Learning

- mathematics + statistics + computing + micro-economics + domain-expertise

- SovSca_Ops := Sovereign and Scalable (Business and Digital) Operations

- law + entrepreneurship + operations

- sovereign = being independent and free from the control of another

- scalable = being able to grow on demand

SovScaDesDisMaLOps

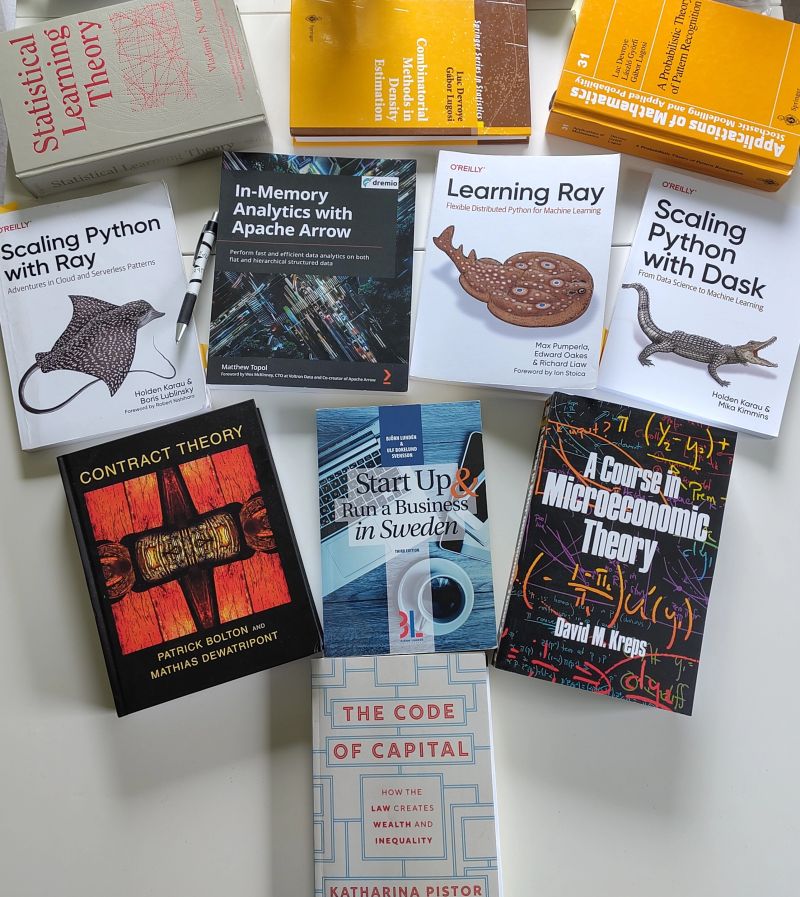

Reference Books

See LI post for context.

Overview

Theoretical Modules -- Overview

- [1 hour] Laws of Capitalism, Intellectual Property Laws in Sweden, Statistical Contract Theory, Machines, Models

- [1 hour] Machines, Models, Abstract Machine Models, Work-Depth Model, Brent's Theorem, Digital Sovereignty, AoAaO

- [30 minutes] Industry Guest Talk about Sovereign (Scalable) Operations by Jim Dowling, CEO of Logical Clocks AB, Stockholm, Sweden

Practical Modules -- Overview

- [1 hour] docker, command-line, git, ray labs

- [1 hour] ray labs with YouTrys

- [last 30 minutes] summer school survey and workshop summary & discussions

Laws of Capitalism

Quick Tour of Laws of Capitalism

- Katharina Pistor breaks down the history, process, institutions, and participants involved in the legal coding of capital.

- She shows us how private actors have harnessed social resources to accumulate wealth, generating not only economic inequality, but inequality in law.

- Enabling them to opt out of jurisdictions, restrict governmental policy, and erode democracy.

- The laws of capitalism have elevated the interests of the few above that of the many, but we can rewrite the code and restore balance to society.

Coding Land & Ideas

Legal "coding" of capital

- how the law selectively "codes" certain assets, endowing them with the capacity to protect and produce private wealth

- how land became legally coded as property during the enclosure movement in England

- attempts to code traditional indigenous land use rights in Belize.

- explains how even ideas, which are not natural property, can be made an entire new class of property according to the law

- institutionalization of copyright and patent law

- coding intellectual property, e.g. patenting human genes

Coding Debt

How & why law codes debt

- how the law has accompanied the evolution of negotiable debt instruments, from the

- simple IOU, to

- bills of exchange in Medieval Genoa, to

- securitized assets in the modern day and on to derivatives

- examines the securitization of mortgage debt in the modern United States in the lead-up to the financial crisis of 2008

- the legal code has been essential in the transformation of humble IOUs

- into elaborate financial contracts, and

- the creation of an entire finance industry

Lawyers, Firms & Corporate Entities

Corporations to create wealth 1600s+

- how the law has accompanied the evolution of private business enterprises,

- from their early roots as simple partnership agreements of temporary duration,

- to colonial joint-stock companies in the 1600s with state charters granting them the legal status of corporations (or legal persons),

- and the eventual introduction of general incorporation statutes in the 1800s simplifying the creation of corporations.

Corporations to create wealth today

- by the 1900s the law enabled the corporation to become the dominant form of business organization under capitalism

- case study of Lehman Brothers: how its organization as a holding company allowed shareholders to profit, while leaving debt-strapped subsidiaries to flounder.

- Lehman harnessed the corporate structure created by law to build a house of cards.

The Tool Kit

Connecting legal codes to capital

- the four essential attributes of capital:

- priority,

- durability,

- universality, and

- convertibility.

- these ensure that an asset generates wealth for its owner, i.e. becomes capital

How to legally code assets as capital?

- through the legal institutions constructing certain bodies of law:

- property law,

- collateral law,

- trust law,

- corporate law,

- bankruptcy law and

- contract law.

- private owners of capital can harness the centralized means of coercion (like litigating in State courts) to make their rights effective

Legal codes to capital in action

- property rights can also be pursued in a more decentralized fashion, with parties picking the law and the forum.

- legal claims are pursued at various venues, but also where law is constructed

- law is not always handed down from above, but often emerges from below, sometimes outside the courts

- law and legal institutions are not static, but evolve and expand by an incremental process, usually driven by interested parties.

- it need not be by legislation - they can change the meaning of a law by renegotiating its interpretation and application.

The Codemaster

Private lawyers in coding capital

- Private Attorneys are the masters of the code

- Although the State and judicial precedent establish the law, it is private attorneys who find the flaws, loopholes, or inaccuracies in the legal code

- history of private lawyers in Prussia, France, and the American legal system

- private lawyers shape and mold the legal code over time

- vast amounts of wealth accumulate in private law firms today

- e.g. relationship between the UK’s Magic Circle of Firms and Russian Oligarchs.

A Code for the Globe

How law codes international capital?

- Laws are national, but international capital transcends borders.

- the domestic law of two hegemons:

- the United Kingdom and

- the United States

- have become the default legal systems used internationally

- globalization has been made possible by using these two dominant jurisdictions to code a global system for trans-national commerce, finance and corporations

Trans-national laws of capitalism

- individual countries may have ceded too much to international capital

- bilateral investment treaties lead to tension between democratic sovereignty and globalization

- desirable domestic regulations and protections sought by the democratic process can be hampered by the threat of expensive lawsuits from foreign investors

- freedom of capital movement across borders brings along an alien legal jurisdiction, imposing restrictions on local governments, elevating the private rights of capital above the public interest, and eroding democracy

Transforming the Code of Capital

How is the code of capital used?

- the legal system is a social resource, but it has been harnessed by private actors to create and accumulate immense private wealth.

- This has not only produced economic inequality today, but also inequality before the law.

- Private actors can opt out of jurisdictions, restrict the policy space of governments, and erode democracy.

how to reform the legal code?

- we can rewrite the code to make it fairer.

- it can be an incremental process

- including rolling back some of the privileges and exemptions acquired by capital over the years,

- setting out jurisdictional boundaries to ensure that the same rules apply to everyone.

- rules can also be adjusted to ensure those who benefit internalize their costs

conscientious citizens of democracies

- the burden need not always fall on the government

- tools and institutions can be created to allow private actors to monitor capital more effectively

- the legal code can be changed to make the law fairer and

- allow governments to regain some policy space,

- to address the needs and implement the protections desired by the people through the democratic process

- however, sovereignty of operations is a pre-requisite to allow the government of your jurisdiction to be able to act.

Any corporation can choose its bylaws!

- The Rebellious CEO: 12 Leaders Who Did It Right, Ralph Nader, Melville House, ISBN: 9781685891077, 2023.

...shows how 12 CEOs ... uniquely rejected narrow yardsticks of shareholder value by leading companies to larger models of prosperity and justice... This select group of mavericks and iconoclasts — which includes The Body Shop’s Anita Roddick, Patagonia’s Yvon Chouinard, Vanguard’s John Bogle and Busboys and Poets’ Andy Shallal — give us, Nader writes, “a sense of what might have been and what still could be if business were rigorously framed as a process that was not only about making money and selling things but improving our social and natural world.”

You can be a rebellious CEO!

- Given the urgency of climate change and increasing wealth inequalities:

- there is no need to wait for the laws of capitalism to be revised.

- We will look at considerations for starting a corporation in Sweden

- specifically at legal codes favouring PhD students in Sweden

- Sweden is ideal for innovation

- Sweden ranks 2nd among the 133 economies featured in the Global Innovation Index 2024

- The land of unicorns: How did Sweden end up with the second largest concentration of billion dollar companies per capita in the world? Professor Robin Teigland explains how a small country nestled away in the Nordics developed its magic touch.

Recommended Exercise

- Minimal Exercise: WATCH Laws of Capitalism Playlist [140 minutes]

The laws of capitalism have elevated the interests of the few above that of the many, but we can rewrite the code and restore balance to society. In this series, Professor Katharina Pistor (Columbia Law School) breaks down the history, process, institutions, and participants involved in the legal coding of capital. She shows us how private actors have harnessed social resources to accumulate wealth, generating not only economic inequality, but inequality in law. Enabling them to opt out of jurisdictions, restrict governmental policy, and erode democracy. Learn more at http://lawsofcapitalism.org/

- References: https://hetwebsite.net/het/video/pistor.htm

- Optional Deeper Dives:

- READ The Code of Capital: How the Law Creates Wealth and Inequality, Katharina Pistor, Princeton University Press, 2019, ISBN: 9780691178974.

- Chapters: Empire of Law, Coding Land, Coding Legal Persons, Minting Debt, Enclosing Nature's Code, A Code for the Globe, The Masters of the Code, A New Code?, Capital Rules by Law.

- READ The Law of Capitalism and How To Transform It, Katharina Pistor, coming in September 2025.


A fascinating study of the legal underpinnings of capitalism, why the system must be transformed, and what we can do about it. While capitalism has been conventionally described as an economic system, it is actually a deeply entrenched legal regime. Law provides the material for coding simple objects, promises and ideas as capital assets. It also provides the means for avoiding legal constraints that societies have frequently imposed on capitalism. Often lauded for creating levels of wealth unprecedented in human history, capitalism is also largely responsible for the two greatest problems now confronting humanity: the erosion of social and political cohesion that undermines democratic self-governance on the one hand, and the threats that emanate from climate change on the other. By exploring the ways that Western legal systems empower individuals to advance their interests against society with the help of the law, Katharina Pistor reveals how capitalism is an unsustainable system designed to foster inequity. She offers ideas for rethinking how the transformation of the law and the economy can help us create a more just system—before it’s too late.


Intellectual Property Laws in Sweden

Professor's Privilege Law (lärarundantaget):

In 1949, a new principle was introduced in the Swedish Patent Act: “Teachers at universities, colleges or other establishments that belong to the system of education should not be treated as employees in the scope of this law” (SFS 1949:345: §1). In practice, the formulation meant that academic scholars were not subject to a new patent law which gave employers the intellectual property rights to inventions made by employees. Or in other words: a patent generated by academic research belonged to the scientist, not to the university. Today – the legislation is still in place – this exception for university scholars is known as the “professor’s privilege” or “teacher's exemption” and lärarundantaget in Swedish ...

- The Nomos of the University: Introducing the Professor's Privilege in 1940s Sweden, Pettersson I., Minerva. 2018;56(3):381-403. doi:10.1007/s11024-018-9348-2

- University entrepreneurship and professor privilege, Erika Färnstrand Damsgaard, Marie C. Thursby, Industrial and Corporate Change, Volume 22, Issue 1, February 2013, Pages 183–218, https://doi.org/10.1093/icc/dts047

Starting a Business in Sweden

Corporate Jurisdictions and Business Sovereignty

- Corporate entities in Sweden?

- Sole trader (Enskild firma), Limited Liability Company (Aktiebolag) and others

- Structure of Aktiebolag (AB)

- Board of Directors ("responsible for operations"), List of Shareholders ("fractional owners")

- Resources:

- Bolagsverket (the Swedish Companies Registration Office)

Statistical Contract Theory

Basics of the Principal-Agent Model under Information Asymmetry.

- Main Assumptions

- An agent and a principal enter into a contract with their own self-interests

- Coercive powers of a State exist (Courts can enforce contracts)

- There is information asymmetry:

- agent won't share crucial private information with principal or vice versa

- It's a take-it-or-leave-it contract from principal to agent

- Example 1: Airline industry

- Principal is the airline; agent is the passenger;

- information hidden from principal: the maximum price an agent is willing to pay per trip

- Story: how was the airline industry rescued by contract theory half a century ago?

- Example 2: Streaming Music Industry - United Masters

- Principal-S is a streaming service provider, e.g. Spotify, Disney Music, YouTube Music, etc.

- Agent-P is a musician producing music

- Agent-C is a listener consuming music

- Traditional contract: Agent-P <- Principal-S -> Agent-C

- United Masters as Principal-UM has a different contract model:

- Agent-P <- Principal-UM -> Agent-S, where Agent-S is a streaming provider

- Example 3: Food Industry - Radically Transparent Food Networks at vake.works

- Food reaches from producer or farmer to eater through a sequence of pairwise contracts between different types of agent-principal pairs.

- Consider charcuterie made of beef, salt and pepper; the following pairs may exist before it reaches the eater:

- producer-processor (e.g. slaughterer, butcher, packager, cold-chain provider)

- processor-transporter, transporter-distributor, distributor-exporter, exporter-importer, importer-distributor, distributor-retailer

- retailer-eater

- Deep dives into various natural living systems experiments by clicking Play All and pausing, playing or forwarding as needed at playlists/podcasts at:

- Reference Readings:

- Michael Jordan UC Berkeley & Statistical Contract Theory Play List

- The Theory of Incentives: The Principal-Agent Model, Jean-Jacques Laffont and David Martimort, Princeton University Press, 2002, ISBN: 9780691091846.

- Contract Theory, Patrick Bolton and Mathias Dewatripont, The MIT Press, 2014, ISBN: 9780262025768.

- A Course in Microeconomic Theory, David M. Kreps, Princeton University Press, 2020, ISBN: 9780691202754.
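To make the take-it-or-leave-it assumption of Example 1 concrete, here is a minimal Python sketch. It assumes, purely for illustration, that the airline knows which willingness-to-pay values occur among passengers but not which passenger holds which value, and that it posts one single price. The numbers and the helper names `revenue` and `best_posted_price` are hypothetical and not taken from the references.

```python
def revenue(price, valuations):
    """Revenue when each agent accepts iff the price is at most their private valuation."""
    return price * sum(1 for v in valuations if v >= price)


def best_posted_price(valuations):
    """Best single take-it-or-leave-it price; trying each distinct valuation suffices."""
    return max(sorted(set(valuations)), key=lambda p: revenue(p, valuations))


# Hypothetical willingness-to-pay of five passengers. The principal cannot
# tell which passenger holds which value (information asymmetry).
valuations = [100, 100, 100, 400, 400]

single_price = best_posted_price(valuations)
single_revenue = revenue(single_price, valuations)

# Full-information benchmark: if valuations were observable, the principal
# could charge every agent exactly their own valuation.
full_information_revenue = sum(valuations)
```

In this toy setting the single posted price earns 800 while the full-information benchmark is 1100. Narrowing that kind of gap, for example with a menu of fare classes, is the sort of problem contract design addresses.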

Machines

Definitions in English

A Machine is a device that directs and controls energy, often in the form of movement or electricity, to produce a certain effect.

A computer is a type of machine and so is a robot, car, bicycle, drone or an autonomous system.

A computer is a machine that can be programmed to automatically carry out sequences of arithmetic or logical operations (computation). Modern digital electronic computers can perform generic sets of operations known as programs, which enable computers to perform a wide range of tasks. The term computer system may refer to

- a nominally complete computer that includes the hardware, operating system, software, and peripheral equipment needed and used for full operation;

- or a group of computers that are linked and function together, such as a computer network or computer cluster,

- whose newest manifestation is cloud computing.

- Cloud computing is "a paradigm for enabling network access to a scalable and elastic pool of shareable physical or virtual resources with self-service provisioning and administration on-demand," according to ISO.

Computer Architecture

- von Neumann Architecture gives the model of a computer with a central processing unit (CPU), a memory unit and I/O devices
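The stored-program idea behind this architecture can be sketched in a few lines of Python. This is a toy model under stated assumptions: the four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and the function `run` are hypothetical, and I/O devices are left out. It only illustrates that program and data live in the same memory and that the CPU repeatedly fetches and executes instructions.

```python
def run(memory):
    """Run a toy stored-program machine until HALT and return the memory."""
    acc, pc = 0, 0                  # CPU state: accumulator and program counter
    while True:
        op, arg = memory[pc]        # fetch the next instruction from memory
        pc += 1
        if op == "LOAD":            # copy a memory cell into the accumulator
            acc = memory[arg]
        elif op == "ADD":           # add a memory cell to the accumulator
            acc += memory[arg]
        elif op == "STORE":         # write the accumulator back to memory
            memory[arg] = acc
        elif op == "HALT":
            return memory


# Program and data share one memory: cells 0-3 hold code, cells 4-6 hold data.
memory = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", None), 2, 3, 0]
result = run(memory)
```

After the run, cell 6 holds the sum of cells 4 and 5.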

Computer Hardware

Computer hardware includes the physical parts of a computer, such as the central processing unit (CPU), random-access memory (RAM), motherboard, computer data storage, graphics card, sound card, and computer case. It includes external devices such as a monitor, mouse, keyboard, and speakers.

Central Processing Unit

A central processing unit (CPU), also called a central processor, main processor, or just processor, is the primary processor in a given computer.

Computer Memory

Computer memory stores information, such as data and programs, for immediate use in the computer.

Multi-Core Processor

A multi-core processor (MCP) is a microprocessor on a single integrated circuit (IC) with two or more separate central processing units (CPUs), called cores to emphasize their multiplicity (for example, dual-core or quad-core).

Graphics Processing Unit

A graphics processing unit (GPU) is a specialized electronic circuit designed for digital image processing... GPUs were later found to be useful for non-graphic calculations involving embarrassingly parallel problems due to their parallel structure. The ability of GPUs to rapidly perform vast numbers of calculations has led to their adoption in diverse fields including artificial intelligence (AI) where they excel at handling data-intensive and computationally demanding tasks.

Computer Software

Software consists of computer programs that instruct the execution of a computer.

Operating System

An operating system (OS) is system software that manages computer hardware and software resources, and provides common services for computer programs.

Android, iOS, and iPadOS are mobile operating systems, while Microsoft Windows, macOS, and Linux are desktop operating systems. Linux distributions are dominant in the server and supercomputing sectors.

HarmonyOS (HMOS) (Chinese: 鸿蒙; pinyin: Hóngméng; trans. "Vast Mist") is a distributed operating system developed by Huawei for smartphones, tablets, smart TVs, smart watches, personal computers and other smart devices.

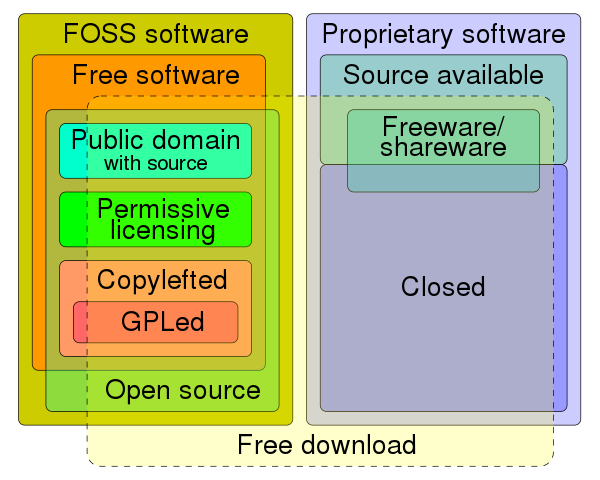

IP, Copyright & License

- Intellectual property (IP) is a category of property that includes intangible creations of the human intellect.

- A copyright is a type of intellectual property that gives its owner the exclusive legal right to copy, distribute, adapt, display, and perform a creative work, usually for a limited time.

- Software copyright is the application of copyright in law to machine-readable software.

- A software license is a legal instrument governing the use or redistribution of software.

Linux, GPL and Distros

Linux (/ˈlɪnʊks/ LIN-uuks) is a family of open source Unix-like operating systems based on the Linux kernel, an operating system kernel first released on September 17, 1991, by Linus Benedict Torvalds. Linux is typically packaged as a Linux distribution (distro), which includes the kernel and supporting system software and libraries—most of which are provided by third parties—to create a complete operating system, designed as a clone of Unix and released under the copyleft GPL license.

We will use Ubuntu and Alpine Linux distros in docker containers.

Dive Deeper: Linux

Linux distributions are dominant in the server and supercomputing sectors.

Cluster Computing

A computer cluster is a set of computers that work together so that they can be viewed as a single system.

Cloud Computing

The newest manifestation of cluster computing is cloud computing.

Service Models

Cloud Computing Vendors

Vendor Lock-In

Anatomy of an AI System

The Amazon Echo as an anatomical map of human labor, data and planetary resources, by Kate Crawford and Vladan Joler

Exercise

- Deep Dive into Amazon Echo by reading https://anatomyof.ai/ ~1h

Models

Definitions in English

An Abstract Model or merely Model is a representation of a physical object that contains an abstraction of reality.

Models are useful to analyse machines, including their architecture, performance, properties and behaviour.

Abstract Machine Models

- Efficiency of an algorithm is analysed using an abstract model of computation or an abstract machine model (AMM)

- Such a model allows the designer of an algorithm to ignore many hardware details of the machine on which the algorithm will be run,

- while retaining enough details to characterize the efficiency of the algorithm,

- in terms of taking less time to complete successfully or

- requiring fewer resources, e.g. number of processors or computers.


Sequential Random Access Machine

The SRAM model is abstracted to consist of:

- a single processor, \(P_1\), attached to a memory module, \(M_1\),

- such that any position in memory can be accessed (read from or written to) by the processor in constant time.

- every processor operation (memory access, arithmetic and logical operations) by \(P_1\) in an algorithm requires one time step and

- all operations in an algorithm have to proceed sequentially step-by-step
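As a minimal sketch of this cost model, the Python function below sums a list while counting time steps. For simplicity it assumes that only the additions are charged one step each, ignoring memory accesses and loop bookkeeping. The name `sram_sum` is a hypothetical helper.

```python
def sram_sum(values):
    """Sum a non-empty list sequentially; return (total, number of additions)."""
    total = values[0]
    steps = 0
    for v in values[1:]:
        total = total + v   # one arithmetic operation, charged one time step
        steps += 1
    return total, steps


total, steps = sram_sum([1, 2, 3, 4, 5, 6, 7, 8])
```

Summing \(n\) numbers this way takes \(n-1\) additions, so the sequential running time grows linearly in \(n\).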

Parallel Random Access Machine

PRAM is the abstract machine model for machines with \(p\) processors sharing a memory, for instance computers and smart phones with multi-core processors.

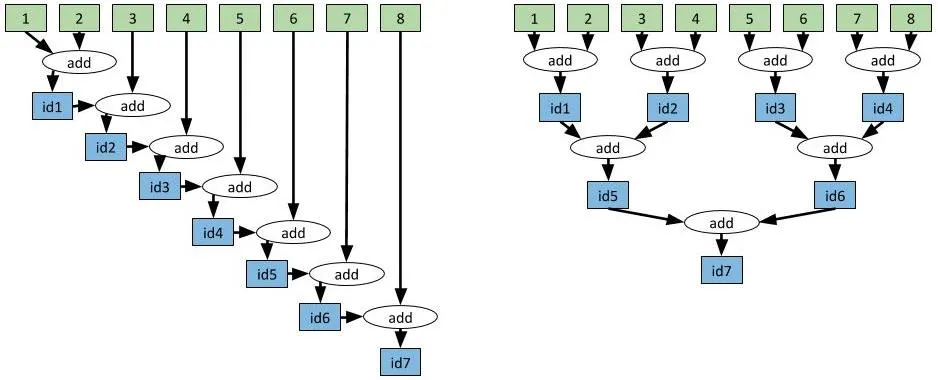
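A minimal sketch of how a PRAM can add numbers faster than a sequential machine is the pairwise (tree) sum below. It assumes that at least \(n/2\) processors are available, so that all additions within one round count as a single parallel time step. The function name `pram_sum` is hypothetical, and the code only simulates the rounds sequentially.

```python
def pram_sum(values):
    """Pairwise tree sum of a non-empty list; return (total, number of rounds)."""
    rounds = 0
    while len(values) > 1:
        # All pairs in a round are independent of each other, so with enough
        # processors they could be added simultaneously.
        paired = [values[i] + values[i + 1] for i in range(0, len(values) - 1, 2)]
        if len(values) % 2 == 1:
            paired.append(values[-1])   # an odd element carries over unchanged
        values = paired
        rounds += 1
    return values[0], rounds


total, rounds = pram_sum([1, 2, 3, 4, 5, 6, 7, 8])
```

Eight values shrink to four, two and one partial sums, so the number of rounds grows like \(\log_2 n\) rather than \(n\).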

Work-Depth Model

- The work-depth model is a more abstract multiprocessor model

- The work-depth model represents a parallel algorithm by a directed acyclic graph (DAG) whose nodes are operations and whose edges are the dependencies between them
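The following sketch shows how work and depth can be read off such a DAG. It assumes a hypothetical encoding in which a dictionary maps each operation to the operations it depends on, and in which only operations, not inputs, are nodes. The helper `work_and_depth` is illustrative.

```python
from functools import lru_cache


def work_and_depth(dag):
    """Return (work, depth) of a DAG given as {operation: dependencies}.

    Work is the number of operations; depth is the number of operations on
    the longest dependency chain.
    """
    @lru_cache(maxsize=None)
    def depth_of(node):
        return 1 + max((depth_of(dep) for dep in dag[node]), default=0)

    return len(dag), max(depth_of(node) for node in dag)


# Two hypothetical DAGs for adding four inputs x1..x4 with three additions.
chain = {"a": (), "b": ("a",), "c": ("b",)}   # ((x1 + x2) + x3) + x4
tree = {"a": (), "b": (), "c": ("a", "b")}    # (x1 + x2) + (x3 + x4)

chain_work, chain_depth = work_and_depth(chain)
tree_work, tree_depth = work_and_depth(tree)
```

Both orderings do the same work (three additions), but the tree has depth 2 while the chain has depth 3, which is why the tree offers more parallelism.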

Brent's Theorem

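With \(T_1\) (the work, i.e. the time on one processor), \(T_p\) (the time on \(p\) processors) and \(T_\infty\) (the depth, i.e. the time with unboundedly many processors) defined in the work-depth model, and if we assume optimal scheduling, then \[ \frac{T_1}{p}\le T_p \le \frac{T_1}{p} + T_\infty. \]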

- Let us go through the key parts of the first Chapter of the Distributed Algorithms and Optimisation Course Notes to understand Brent's Theorem carefully.

- These notes are for the theoretical parts of the 6hp WASP PhD course titled Scalable Data Science and Distributed Machine Learning (ScaDaMaLe) that I will be offering in Fall 2026, where we will dive deeper into many more parallel and distributed algorithms.
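A small numerical check can make the two bounds tangible. The sketch below assumes a hypothetical DAG for a balanced tree sum of eight inputs (4 + 2 + 1 additions) and a simple greedy scheduler, `greedy_steps`, that runs up to \(p\) ready operations per time step. Greedy scheduling need not be optimal, but it is enough to illustrate the inequality on this example.

```python
def greedy_steps(dag, p):
    """Time steps needed when at most p ready operations run per step."""
    done, steps = set(), 0
    while len(done) < len(dag):
        # An operation is ready once all of its dependencies have finished.
        ready = [n for n in dag if n not in done and all(d in done for d in dag[n])]
        done.update(ready[:p])
        steps += 1
    return steps


# Hypothetical DAG: balanced tree sum of 8 inputs, given as {operation: dependencies}.
dag = {
    "a1": (), "a2": (), "a3": (), "a4": (),
    "b1": ("a1", "a2"), "b2": ("a3", "a4"),
    "c1": ("b1", "b2"),
}

T1 = greedy_steps(dag, 1)             # one processor: equals the work
Tinf = greedy_steps(dag, len(dag))    # enough processors: equals the depth
T2 = greedy_steps(dag, 2)             # two processors
```

Here \(T_1 = 7\), \(T_\infty = 3\) and \(T_2 = 4\), which indeed lies between \(T_1/2 = 3.5\) and \(T_1/2 + T_\infty = 6.5\).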

Distributed Parallel Random Access Machine Model

- DPRAM and its more abstract Distributed Work-Depth Model are the abstract machine models used to analyse computations done by a cluster of computers as well as computation in the cloud.

- In addition to time and space for \(p\) processors and memory units, we also need to analyse the cost of communication between \(c\) PRAM computers in our cluster.

- The ScaDaMaLe course will cover such algorithms in DPRAM models over a whole semester in addition to coding in distributed systems for group projects.

- This workshop is a mini-primer for the ScaDaMaLe course in Fall 2026.

Implementation in Ray

- We will use Ray to implement algorithms under the DPRAM model in labs.

- Ray is an open source project for parallel and distributed Python.

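    import time
    import ray

    ray.init()

    # `add` is not shown in this deck; assumed here, as in the referenced
    # tutorial, to be a remote task that takes about one second.
    @ray.remote
    def add(x, y):
        time.sleep(1)
        return x + y

    # Slow approach.
    values = [1, 2, 3, 4, 5, 6, 7, 8]
    while len(values) > 1:
        values = [add.remote(values[0], values[1])] + values[2:]
    result = ray.get(values[0])

Runtime: 7.09 seconds

    # Fast approach.
    values = [1, 2, 3, 4, 5, 6, 7, 8]
    while len(values) > 1:
        values = values[2:] + [add.remote(values[0], values[1])]
    result = ray.get(values[0])

Runtime: 3.04 seconds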

We will dive deeper into Ray, but for now let's glance at this 7-minute read from one of the creators of Ray.

- Modern Parallel and Distributed Python: A Quick Tutorial on Ray by Robert Nishihara

- You will dive into why the Runtime is different in a YouTry during the labs for Ray
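One possible way to reason about the two runtimes ahead of the lab is to replay both loops in plain Python, without Ray, while tracking when each partial sum becomes available. The sketch assumes that every `add` takes one time unit (the reported runtimes suggest roughly one second per call), that there are enough workers, and that a task starts as soon as both of its inputs are ready. The function `finish_time` is a hypothetical helper, not part of Ray.

```python
def finish_time(values, fast):
    """Replay the aggregation loop; return (result, time at which it is ready).

    Each list entry is a pair (value, ready_time). Inputs are ready at time 0
    and every add finishes one time unit after its later input is ready.
    """
    items = [(v, 0) for v in values]
    while len(items) > 1:
        (x, tx), (y, ty) = items[0], items[1]
        new = (x + y, max(tx, ty) + 1)
        # Fast approach appends the new partial sum at the end of the list,
        # slow approach puts it back at the front.
        items = (items[2:] + [new]) if fast else ([new] + items[2:])
    return items[0]


slow_result, slow_time = finish_time([1, 2, 3, 4, 5, 6, 7, 8], fast=False)
fast_result, fast_time = finish_time([1, 2, 3, 4, 5, 6, 7, 8], fast=True)
```

Under these assumptions the slow ordering builds a chain of depth 7, because every add waits for the previous one, while the fast ordering builds a balanced tree of depth 3. This is in line with the measured runtimes of about 7 and 3 seconds.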

Digital Sovereignty

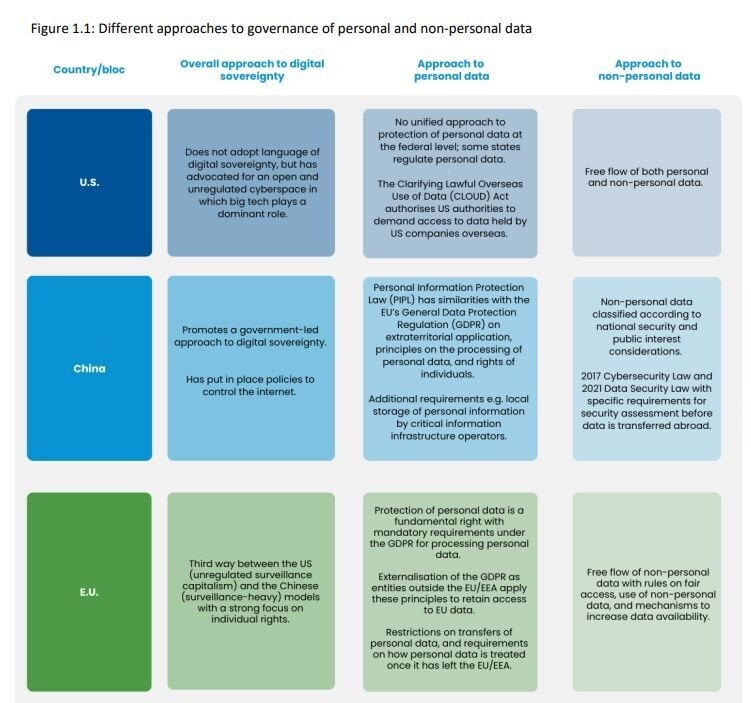

Digital Sovereignty

According to The World Economic Forum

Digital sovereignty, cyber sovereignty, technological sovereignty and data sovereignty refer to the ability to have control over your own digital destiny – the data, hardware and software that you rely on and create.

... the physical layer (infrastructure, technology), the code layer (standards, rules and design) and the data layer (ownership, flows and use).

US, Chinese and European Models

Erosion of European Trust in US Tech

Data Cut & Kill Switch Anxieties

'Kill Switch Shield' & the Recurring Erosion of Trust in US Tech 2025-07-28

In the emerging internet governance era, the focus (of government anxieties) is on data cuts: when governments and companies get cut off from the network, unable to access their information or contacts. ... Trump was threatening EU leaders with tariffs if they did not align with his Make America Great Again agenda. The risk that US sanctions could trigger loss of access to critical digital infrastructure should spark widespread concerns about the degree of US control over key information platforms, including the potential for President Trump to weaponize the private US corporations that governments, such as the EU, rely upon.

Case Study: Stargate—The Crown Jewel of Panic Lock-In

OpenAI’s Stargate initiative is the ultimate expression of the new digital empire.

- Sold as “trusted AI infrastructure for nations,” it’s marketed with the language of partnership, localization, and shared innovation.

- The reality: every layer is controlled by U.S. firms—Oracle owns the cloud, NVIDIA controls the chips and drivers, OpenAI governs the models, and the U.S. government holds the legal override through the CLOUD Act and export restrictions.

- Local deployments are a mirage: there’s no right to audit, no right to fork, no escape from the legal and operational perimeter set in Washington.

Primary Source Excerpt:

"All services provided under this agreement are subject to United States export control laws and regulations. The provider reserves the right to terminate access, modify service, or revoke licenses in compliance with such regulations." — Standard SaaS/Cloud T&Cs, 2024

Stargate is not a partnership. It is a velvet-wrapped cage—once you enter, there is no exit.

Technological Sovereignty Vs. Digital Colonialism

Marietje Schaake, the author of The Tech Coup, in Beware America’s AI colonialism 2025-08-20, issues a timely warning about the dangerous conundrum American AI poses to other states.

The choice facing world leaders is not between US or Chinese AI dominance but between technological sovereignty and digital colonialism. Each trade confrontation should teach potential partners that today’s commercial relationships can become tomorrow’s coercive leverage.

Some key quotes are as follows:

President Donald Trump’s trade wars are teaching the world a harsh lesson: dependencies get weaponised. In the White House’s view, international trade is zero-sum. With his AI Action Plan promising “unchallenged” technological dominance a further ambition is clear. Will the rest of the world recognise that embracing US artificial intelligence offers Trump an even more potent tool for coercion?

More than previous technologies, AI systems create uniquely vulnerable dependencies. Algorithms are not transparent and can be manipulated to bias outputs — whether challenging antitrust rules or supporting protectionism. With a significant set of US tech chief executives pledging allegiance to this administration, the synergy between political and corporate agendas is clear. AI companies have even deployed team members in the US armed forces.

The weaponisation possibilities are extensive. Take the Cloud Act, which forces the disclosure of foreign data by domestic cloud providers, whose services dominate worldwide.

It is easy to see how tech can become an even greater bargaining chip in US foreign policy. As with steel or pharmaceuticals, Trump’s White House can simply impose a tariff on AI services or critical elements of the supply chain. The administration is already pushing the EU to weaken its Digital Services Act and considered leveraging tariffs to force a change to the UK’s online safety laws earlier this year.

The Trump administration frames the AI race as a competition between democratic and authoritarian models. Yet this obscures a troubling reality: the gap between US and Chinese approaches to technological control is narrowing. Governance grows more authoritarian by the day in Trump’s America, with political interventions reaching individual company levels.

Two Recent Violations

1: Microsoft admits it 'cannot guarantee' data sovereignty 2025-07-25

Microsoft says it "cannot guarantee" data sovereignty to customers in France – and by implication the wider European Union – should the Trump administration demand access to customer information held on its servers.

2: The US is sanctioning judges from the ICC

The International Criminal Court (ICC) deplores the additional designations for sanctions which were announced today by the United States of four judges of the Court: Second Vice-President Reine Adelaide Sophie Alapini Gansou (Benin), Judge Solomy Balungi Bossa (Uganda), Judge Luz del Carmen Ibáñez Carranza (Peru) and Judge Beti Hohler (Slovenia). These additional designations follow the earlier designation of Prosecutor Karim A.A. Khan KC.

These measures are a clear attempt to undermine the independence of an international judicial institution which operates under the mandate from 125 States Parties from all corners of the globe. The ICC provides justice and hope to millions of victims of unimaginable atrocities, in strict adherence to the Rome Statute, and maintains the highest standards in protecting the rights of suspects and the victims.

For more examples of violations to natural, corporate and governmental entities see Digital Sovereignty Revoked: Infrastructure as a Weapon in Big AI, Big Tech, and the U.S. Government Are Afraid of Digital Sovereignty—Very Afraid, Dion Wiggins, 2025-08-27.

More Examples

Digital Sovereignty Revoked: Infrastructure as a Weapon

The June 5, 2025 sanctions on four ICC judges marked the moment the mask slipped. Under Executive Order 14203, U.S. companies were barred from providing “services”—including cloud, email, authentication, and collaboration platforms. It wasn’t a cyberattack or breach. It was lawful revocation, silently executed by vendors like Microsoft and Google under compulsion. A global tribunal risked being digitally locked out for doing its job. The message was clear: infrastructure is no longer neutral. It is jurisdictional, weaponized, and conditional on foreign alignment.

The ICC wasn’t the first warning. Türkiye nearly lost its entire education system in 2024 when Microsoft threatened to pull licenses for 18 million students—triggered not by malice, but by an automated program retirement. In April 2025, Sun Yat-sen University in China lost its Microsoft 365 environment in 48 hours due to U.S. export control spillover—treating a research campus like a sanctioned arms dealer. In Europe, Telekom Deutschland was forced into a legal trap: violate EU law by obeying U.S. sanctions, or lose access to U.S. markets. In each case, sovereignty was less about systems than about who writes the rules—and who can flip the switch.

The same fragility extends to individuals. A Windows 11 user lost 30 years of personal data when BitLocker keys were trapped inside a suspended OneDrive account. A San Francisco father was permanently locked out of Gmail, business records, and his child’s medical files after Google’s AI flagged a telemedicine photo. Even my own Blogger account was erased for replying “too quickly,” with access to Gmail, Drive, and identity services revoked instantly. These aren’t anomalies. They are systemic failures showing how fragile digital life becomes when authentication, storage, and identity are fused under foreign terms of service.

The pattern is consistent: one license email in Türkiye, one compliance notice in Guangzhou, one sanctions designation in The Hague, one AI flag in San Francisco—and entire systems collapse. This isn’t about cloud strategy or cost efficiency. It’s about governance, leverage, and survival. If your infrastructure is subject to someone else’s law, you don’t own your systems. You lease them. And leases end the moment you become politically inconvenient.

In the age of digital bloc lock-in, neutrality will be treated as compliance — and compliance as surrender.

The question for every government, university, enterprise, and citizen is brutally simple: if your cloud provider revoked access tomorrow, could you still function? If the answer is no, you are not sovereign—you’re operational on borrowed terms. For deeper analysis and real-world case studies, see my companion reports: Sovereignty Revoked: One Email, One Algorithm, and Everything Dies and Digital Sovereignty Revoked: How Cloud Infrastructure Became a Weapon of Foreign Policy.

USA PATRIOT Act & US Gov Access

USA PATRIOT Act vs SecNumCloud: Which Model for the Future?

The USA PATRIOT Act was passed in 2001 after the September 11 attacks to expand government agencies' powers in surveillance and counterterrorism. In practice, it grants U.S. authorities broad surveillance capabilities, allowing access to data from companies under American jurisdiction, regardless of where it is stored.

Trans-Atlantic mistrust increased after the 2013 Snowden revelations of US mass surveillance programs. Read Permanent Record by Edward Snowden, 2019-09-17, for details.

The adoption of the US CLOUD Act in 2018 further strengthened this authority. It requires American companies to provide data upon request, even if the data is stored on servers located in Europe.

The extraterritorial nature of these laws forces American companies to hand over data to U.S. authorities, including data stored in Europe. This creates a direct conflict with the GDPR. For European businesses using American cloud services, it opens the door to potential surveillance of their strategic and sensitive data.

Beyond confidentiality concerns, this situation raises a real challenge to digital sovereignty, as it questions Europe’s ability to manage its own data independently and securely.

SecNumCloud for Digital Sovereignty

USA PATRIOT Act vs SecNumCloud: Which Model for the Future?

In response to these challenges, France developed SecNumCloud, a cybersecurity certification issued by ANSSI (the National Cybersecurity Agency in France). It ensures that cloud providers adhere to strict security and data sovereignty standards.

SecNumCloud-certified providers must meet strict requirements to safeguard data integrity and sovereignty against foreign interference. First, cloud infrastructure and operations must remain entirely under European control, ensuring no external influence — particularly from the United States or other third countries — can be exerted.

Additionally, no American company can hold a stake or exert decision-making power over data management, preventing any legal obligation to transfer data to foreign authorities under the CLOUD Act.

Just as importantly, clients retain full control over access to their data. They are guaranteed that their data cannot be used or transferred without their explicit consent.

With these measures, SecNumCloud prevents foreign interference and ensures a sovereign cloud under European control, fully compliant with the GDPR. This allows European businesses and institutions to store and process their data securely, without the risk of being subject to extraterritorial laws like the CLOUD Act.

SecNumCloud ensures strengthened digital sovereignty by keeping data under exclusive European jurisdiction, shielding it from extraterritorial laws like the CLOUD Act. This certification is essential for strategic sectors such as public services, healthcare, defense, and Operators of Vital Importance (OIVs), thanks to its compliance with the GDPR and European regulations.

ANSSI designed SecNumCloud as a sovereign response to the CLOUD Act. Today, several French cloud providers, including Outscale, OVHcloud, and S3NS, have adopted this certification.

SecNumCloud could serve as a blueprint for the EUCS (European Cybersecurity Certification Scheme for Cloud Services), which seeks to create a unified European standard for a sovereign and secure cloud.

US-owned "Sovereign EU Clouds"

- Google carves out cloudy safe spaces for nations nervous about America's reach

- AWS forms EU-based cloud unit as customers fret about Trump 2.0, 2025-06-03

Regardless of Amazon's data sovereignty pledge, the parent company remains under American ownership, and may still be subject to the Cloud Act, which requires US companies to turn over data to law enforcement authorities with the proper warrants, no matter where that data is stored.

As Frank Karlitschek, CEO of Germany-based Nextcloud, told us in March: "The Cloud Act grants US authorities access to cloud data hosted by US companies. It does not matter if that data is located in the US, Europe, or anywhere else."

GPU Sovereignty in China

GPU Sovereignty Shift: How China’s “Big AI” Are Powering AI Without Nvidia, Dion Wiggins, 2025-08-19

This is a forensic deep-dive into the collapse of Nvidia’s dominance in China and the rise of a fully sovereign AI hardware-software stack that is now being exported globally. Washington’s sanctions, intended to cripple China’s AI ambitions, instead forced the world’s largest AI market to accelerate domestic innovation, flipping dependency into deterrence in less than two years. The result: Chinese players like Huawei, ByteDance, Tencent, Alibaba, Baidu, and a new generation of hardware startups have restructured their entire AI infrastructure around sovereign GPUs, breaking the U.S. chokehold on advanced compute.

Take-home: Digital Sovereignty is Anti-Dependence

Talking about digital sovereignty shouldn’t make you look like a traitor. But today, it does.

Say you want alternatives to U.S. tech? You’re accused of being anti-American.

Mention China’s progress in AI? You’re branded pro-authoritarian.

Advocate for local infrastructure? Now you’re undermining allies.

This framing is toxic. And it’s designed to shut down the one conversation that actually matters:

Who owns your nation's or organization's digital future?

This article dismantles the trap we’ve all been forced into.

We need to be able to talk openly—about the risks, the challenges, and the benefits of digital sovereignty—without being labeled anti-American, pro-China, or anything else.

Because digital sovereignty isn’t about picking sides. It’s about having the right to choose your own system—without permission, without gatekeepers, and without apology.

Let us actually peruse this article by Dion Wiggins now!

Deep Dive Yourself

- Rewiring Democracy: Exposing the Tech Coup with Marietje Schaake ~1h

- The Tech Coup: How to Save Democracy from Silicon Valley ~1.5h

- Read: Beware America’s AI colonialism 2025-08-20;

- Read (optional): The Tech Coup

- Why Countries Must Fight For Digital Sovereignty, 2025-08-21 ~1h

- Non-exhaustive deep dives into digital sovereignty:

- The CLOUD Act and Transatlantic Trust, 2023-03-29

- Euro techies call for sovereign fund to escape Uncle Sam's digital death grip, 2025-03-17

- Digital Sovereignty is far more than compliance, 2025-07-29

- 'Kill Switch Shield' and the Recurring Erosion of Trust in US Tech

- Policy & Internet: Volume 16, Issue 4, 2025-01-28

- GPU Sovereignty Shift: How China’s “Big AI” Are Powering AI Without Nvidia, Dion Wiggins, 2025-08-19

- No, Digital Sovereignty Doesn’t Mean You’re Anti-America or Pro-China: It’s Anti-Dependence, Dion Wiggins, 2025-05-28

- Big AI, Big Tech, and the U.S. Government Are Afraid of Digital Sovereignty—Very Afraid, Dion Wiggins, 2025-08-27

Analysis of Algorithms & Operations

Putting it all together...

DPRAMJEM: Math+Law

DPRAMJEM := Distributed Parallel Random Access Machine Jurisdiction-Explicit Model

- Asymptotic analysis of efficiency of algorithms under different AMMs

- Abstract Machine Model (AMM)

- SRAM: Sequential Random Access Machine Model (older computers)

- PRAM: Parallel Random Access Machine Model (laptops and smart phones)

- DPRAM: Distributed Parallel Random Access Machine Model (cluster of computers or cloud)

- SRAM PRAM and DPRAM models with 1, 2, ..., p, p+1, ... processors and a memory model

- Work-Depth Model and Brent's Theorem

- Example + : Addition of n numbers in SRAM, PRAM and DPRAM models

- we will revisit this example implemented using ray.io in a memory model

- Legal analysis of the sovereignty of operations under the Jurisdiction-Explicit Model (JEM) extends AMMs beyond the analysis of algorithms

- JEM-local-Sverige is our default model when we work in Sweden (Sverige) with sovereign infrastructure in Sweden

- Example: own laptops (default for this workshop), workstations, or own on-premise clouds (NuC cluster) under sovereignty of Sverige

- many clouds in a sky: "sky computing" means your service is agnostic to a set of clouds

- Example: Databricks offers sky computing across some clouds: AWS, GCP and Azure

- JEM-sky-State extends DPRAM by specifying the Nation State of the cloud-provider's HQ, i.e., the State that can enforce contracts and regulate each cloud in the sky.

- Examples of JEM-sky-State, a set of cloud providers under the jurisdiction of a State:

- JEM-sky-USA includes AWS, GCP, Azure, etc.

- JEM-sky-China includes Alibaba, Tencent, Huawei, etc.

- JEM-sky-EU includes Scaleway, OVHcloud, Exoscale, Elastx, etc.

- JEM-sky-Sverige includes Elastx, Bahnhof, etc.

- DPRAMJEM-sky-State = Distributed Parallel Random Access Machine Jurisdiction-Explicit Model is the "Math+Law" model required for sovereign operations in the sky of a said State

- i.e., to enforce contracts or prohibit their violations or regulate possible contracts through coercive powers of the said State and not by another State.

- Break-time! ... AoAO... AoAO ahhhaaaa!
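To make the work-depth idea in the list above concrete, here is a minimal Python sketch of adding n numbers by pairwise (tree) reduction. It is an illustration only and not the workshop's ray implementation: the function names `tree_sum` and `brent_bound` are hypothetical, and the parallel rounds are simulated sequentially while counting the work W (total additions) and the depth D (number of rounds). Brent's theorem then bounds the running time on p processors by W/p + D.

```python
def tree_sum(values):
    """Sum values by pairwise reduction; return (total, work, depth)."""
    level = list(values)
    work = 0   # W: total number of additions performed
    depth = 0  # D: number of rounds of independent additions
    while len(level) > 1:
        nxt = []
        # All additions within one round are independent of each other,
        # so a PRAM with enough processors could do them in one step.
        for i in range(0, len(level) - 1, 2):
            nxt.append(level[i] + level[i + 1])
            work += 1
        if len(level) % 2 == 1:
            nxt.append(level[-1])  # odd element carries over unchanged
        level = nxt
        depth += 1
    total = level[0] if level else 0
    return total, work, depth


def brent_bound(work, depth, p):
    """Upper bound W/p + D on the time with p processors (Brent's theorem)."""
    return work / p + depth


total, work, depth = tree_sum(range(1, 9))  # add the numbers 1..8
print(total, work, depth)
print(brent_bound(work, depth, p=4))
```

With p = 1 the bound is dominated by the work, which matches the SRAM view of n - 1 sequential additions; with many processors it is dominated by the depth, which grows like log2(n).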

Practical Modules -- Overview

- [1 hour] docker, command-line, git, ray labs

- [1 hour] ray labs with YouTrys

- [last 30 minutes] summer school survey and workshop summary & discussions

We will switch to the book and jupyter notebooks for this part.

Prepare Your Laptop

- You will be learning the practical modules on your own laptops.

- Prepare your laptop with the following four tasks well before the workshop.

Task 1: Update System

- Make sure your laptop is up to date and secure. You can do this by updating your system software on Mac, Windows or Linux as follows:

Task 2: Install Docker

- Install Docker by following the instructions for your laptop:

- Docker Desktop is available for Mac, Linux and Windows

- Alternatively, on Linux, install Docker Engine

Task 3: Check Docker Works

- Check that your docker installation was successful

- If Docker Desktop is installed, make sure that it is launched and running.

- Open your CLI terminal and start a container by running the command:

docker run hello-world

- You should receive a Hello from Docker! in the output.

- Run welcome-to-docker as a daemon, mapping container port 80 to host port 8080.

docker run -d -p 8080:80 docker/welcome-to-docker

- Visit http://localhost:8080 in your browser to access this container and see a welcome message.

- Identify and stop the running welcome-to-docker container.

Task 4: Pull workshop docker images

- We will use the workshop's latest docker images built for the two main processor architectures.

- First you need to find out which type of processor your laptop has (do an online search if you don't know how).

- You can simply pull these docker images as follows:

- For most modern Mac laptops with Apple silicon (M1/M2/etc.) on ARM 64-bit processors:

docker pull vakeworks/sovscadesdismalops:dev-arm64

- For most Windows, Linux and older Mac laptops with Intel/AMD x86 64-bit processors:

docker pull vakeworks/sovscadesdismalops:dev-amd64

- You should be able to open a terminal inside a running docker container

- [OPTIONAL] You can check out the command-line and docker pages we will be going through.

- [OPTIONAL] Advanced Exercise: You can build these docker images from scratch on your own by following Docker Ray Dev.

Set Up, Labs & Objectives

Set Up Laptop

Let us set up our laptop for the practical modules.

Starting Docker Container

- Make sure you are in a directory in the host machine that you want to work from as this directory will be mounted inside the docker container.

- We will make a directory named workshop and launch the docker container from there.

- Windows, Mac and Linux:

mkdir workshop

- and change into the directory

cd workshop

- Your current directory should be workshop before you launch the next few commands.

pwd

Warning

If you are in your home directory or another directory on your laptop (host), then that directory will get mounted inside the docker container as /home/ray/workshop

- For most modern Mac laptops with Apple silicon (M1/M2/etc.) on ARM 64-bit processors:

docker run --shm-size=3g -d -t -i -v ${PWD}:/home/ray/workshop -p8888:8888 -p6379:6379 -p10001:10001 -p8265:8265 --name=sovscadesdismalops vakeworks/sovscadesdismalops:dev-arm64

- For most Windows, Linux and older Mac laptops with Intel/AMD x86 64-bit processors:

docker run --shm-size=3g -d -t -i -v ${PWD}:/home/ray/workshop -p8888:8888 -p6379:6379 -p10001:10001 -p8265:8265 --name=sovscadesdismalops vakeworks/sovscadesdismalops:dev-amd64

- Let's understand the above command quickly (deep dive at docker).

- See that the container is running and note its id and name.

docker ps

- You should see something like this:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

12dfbd942909 vakeworks/sovscadesdismalops:dev-amd64 "/bin/bash" 16 minutes ago Up 16 minutes 0.0.0.0:6379->6379/tcp, [::]:6379->6379/tcp, 0.0.0.0:8265->8265/tcp, [::]:8265->8265/tcp, 0.0.0.0:8888->8888/tcp, [::]:8888->8888/tcp, 0.0.0.0:10001->10001/tcp, [::]:10001->10001/tcp sovscadesdismalops

- Also, try:

docker stop sovscadesdismalops, docker start sovscadesdismalops, docker kill sovscadesdismalops, ..., docker rm <CONTAINER ID> etc.
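The flags in the docker run command above can be read one by one. The following Python sketch only assembles the same argument list with a comment per flag; it does not start Docker. The helper name `build_run_command` is hypothetical, the flag descriptions follow the standard docker run options, and the port roles in the comments are assumptions based on common Jupyter and Ray defaults rather than something this text states.

```python
import os
import shlex


def build_run_command(arch, host_dir):
    """Assemble the workshop's docker run command as an argument list."""
    image = f"vakeworks/sovscadesdismalops:dev-{arch}"
    return [
        "docker", "run",
        "--shm-size=3g",   # size of shared memory (/dev/shm) in the container
        "-d",              # detached: run the container in the background
        "-t", "-i",        # allocate a terminal and keep stdin open
        "-v", f"{host_dir}:/home/ray/workshop",  # mount host dir in container
        "-p8888:8888",     # host:container port (assumed: Jupyter)
        "-p6379:6379",     # assumed: Ray head node
        "-p10001:10001",   # assumed: Ray client
        "-p8265:8265",     # assumed: Ray dashboard
        "--name=sovscadesdismalops",  # fixed name for docker exec/stop/start
        image,
    ]


cmd = build_run_command("amd64", os.getcwd())
print(shlex.join(cmd))
```

Printing the joined command is a cheap way to check what will be mounted before running it: the directory left of the colon in the -v argument is the host directory that appears inside the container as /home/ray/workshop.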

Get into the container

We can get into the container and use git to download the latest notebooks for the workshop.

docker exec -i -t sovscadesdismalops /bin/bash

This would get you into the sovscadesdismalops docker container.

(base) ray@12dfbd942909:~$

Now, you can try the following commands inside the container.

Copy the following command and paste it into the container or just type it in:

This should show the following output:

- print working directory using pwd, like so:

pwd

(base) ray@12dfbd942909:~$ pwd

/home/ray

- list the files and directories using ls commands.

ls

anaconda3 learn pip-freeze.txt requirements_compiled.txt workshop

- list the files and directories in workshop directory

ls workshop

- This will show (unless you mounted a directory with files and folders already in it):

(base) ray@12dfbd942909:~$

Obtain lab materials

Now let us change directory into workshop and use git to download some notebooks for the workshop:

cd workshop

git clone --no-checkout --depth=1 --filter=tree:0 https://github.com/VakeWorks/SovScaDesDisMaLOps.git

cd SovScaDesDisMaLOps/

git sparse-checkout set --no-cone /labs

git checkout

Now you can use the following commands to see where you are and what has been downloaded with git:

pwd and ls -R labs

It will look something like this with the respective LICENSEs:

output

(base) ray@12dfbd942909:/home/ray/workshop/SovScaDesDisMaLOps$ pwd

/home/ray/workshop/SovScaDesDisMaLOps

(base) ray@12dfbd942909:/home/ray/workshop/SovScaDesDisMaLOps$ ls -R labs/

labs/:

learning_ray my_script.py nishihara_blogpost ray scalingpythonml

labs/learning_ray:

LICENSE README.md notebooks

labs/learning_ray/notebooks:

ch_01_overview.ipynb ch_02_ray_core.ipynb ch_03_core_app.ipynb images

labs/learning_ray/notebooks/images:

chapter_01 chapter_02 chapter_03

labs/learning_ray/notebooks/images/chapter_01:

AIR.png cartpole.png ray_layers.png simple_cluster.png

Ecosystem.png ds_workflow.png ray_layers_old.png

labs/learning_ray/notebooks/images/chapter_02:

architecture.png map_reduce.png task_dependency.png worker_node.png

labs/learning_ray/notebooks/images/chapter_03:

train_policy.png

labs/nishihara_blogpost:

aggregation_example.ipynb images

labs/nishihara_blogpost/images:

DepGraphAdd1to8_RobertNishihara.webp

labs/ray:

LICENSE README.md doc

labs/ray/doc:

source

labs/ray/doc/source:

ray-core

labs/ray/doc/source/ray-core:

examples

labs/ray/doc/source/ray-core/examples:

gentle_walkthrough.ipynb images

labs/ray/doc/source/ray-core/examples/images:

task_dependency.png

labs/scalingpythonml:

README.md

To check out a fresh, up-to-date copy of the labs on August 29 2025 after lunch, delete the older labs according to the commands in the Warning and repeat the steps above in Obtain lab materials. It is best to wait and do the Set Up together, as the notebooks are still being prepared and tested. Otherwise, you can use git and resolve any conflicts.

Next Steps for Labs

- Let us do a show of hands now to gauge the distribution of current experiences and skills

- How many of you are (1) already familiar, (2) somewhat familiar, (3) not at all familiar with:

- command-line interface to interact with machines?

- git for distributed version control?

- docker containers?

- ray?

- Let us assign a triangle, a square or a circle to each of you based on your responses.

- Triangles are those who have more familiarity with at least two of the above four items.

- Squares are those who have some familiarity with at least one of the above four items.

- Circles are those who have the least familiarity with most of the above four items.

- GOAL: When you do YouTrys in the labs on your own, make sure you are in a group of 3-4 students with at least one Triangle or Square. Ideally, no group should consist only of Circles.

- Operating System (OS) show of hands. How many of you have one of the following OSs?

- Linux and other Unix-like operating systems?

- Have Linux laptop?

- Have Windows laptop?

- Have Mac laptop?

- Have Harmony OS laptop?

- We will glance next over Command-Line Interface, Docker and Git to motivate and let those with less experience deep-dive later.

- We will quickly demonstrate how to get our fingers on the keys and work on the labs by directly using the minimal required steps to get to our main destination that is Ray, an open source project for parallel and distributed Python.

- You are expected to use your laptops to follow along in real-time.

- If you get stuck, don't worry: there will be exercises, or YouTrys, that let you try on your own in a small group, perhaps with others who can help.

- Raaz and Alfred will be moving around the small groups during YouTrys.

Objective of the Labs and Bigger Picture

- The objective of the labs is to help you take the first steps in this highly integrative field of sovereign scalable data engineering sciences and distributed machine learning operations.

- It is also, perhaps, to inspire you to consider taking the elective 6 hp WASP PhD course Scalable Data Science and Distributed Machine Learning (ScaDaMaLe), held entirely with Raaz in a more focused and structured mathematical and coding setting over a whole semester in the Fall of 2026, where you will do group projects with others.

- Check out the past student group project presentations reachable from the above link. Peer-reviewed executable books were written in the 2020, 2022 and 2024 instances of the course, and the corresponding oral presentations are in the following links, curated by year:

Command-Line Interface

In the beginning, was the command-line...

$ toilet command-line

# ""# "

mmm mmm mmmmm mmmmm mmm m mm mmm# # mmm m mm mmm

#" " #" "# # # # # # # " # #" # #" "# # # #" # #" #

# # # # # # # # # m"""# # # # # """ # # # # #""""

"#mm" "#m#" # # # # # # "mm"# # # "#m## "mm mm#mm # # "#mm"

- A command-line interface (CLI) is a means of interacting with software via commands

- each formatted as a line of text

- CLIs emerged in the mid-1960s, on computer terminals, as an interactive alternative to punched cards

- Alternatives to a CLI include a GUI (Graphical User Interface) but GUI is not conducive to automating programs

- CLI and GUI are interfaces around the same thing and can be used interchangeably

- except that CLI is much faster and more reliable when managing a cluster or server farm

- Resources for deeper dives:

- READ: https://en.wikipedia.org/wiki/Command-line_interface

- YouTry Shells in Action at: freecodecamp

Let's Try Together Now!

Let's try the following commands on our command-line interface (CLI), also known as shell or terminal now.

The commands are adapted from Wallace Preston's Ultimate Terminal Cheatsheet - Your Key to Command Line Productivity.

Bookmark https://cheatsheetindex.com/ for many more tools.

Master the Terminal and Rule the Command Line Kingdom!

Welcome to the Ultimate Terminal Cheatsheet! We know the command line can be intimidating, like trying to navigate a maze blindfolded. But fear not, for this cheatsheet will be your trusty guide, like a GPS for your terminal. From manipulating files to networking to version control, we've got you covered. Let's power up our keyboards and get ready to conquer the command line!

Basic Terminal Commands

- Command line

- See where I am: pwd

- list files: ls

- list hidden files: ls -a

- change directory: cd <directory-name>

- Open whole directory in VS Code: code .

- shorthand for current and parent folder

- ls . lists current folder

- ls .. lists parent folder

- cd .. moves up a folder

- home vs root

- ~ is short for home

- cd ~ brings you home

- / is short for root

- cd / brings you to root

- absolute vs relative paths

- Absolute: touch /folder-name/file-name.txt goes to the root folder, into folder-name, makes a file inside of that folder

- Relative: touch ./folder-name/file-name.txt goes into folder-name from current level, makes a file inside of that folder

- touch ~/folder-name/file-name.txt goes into your home directory (usually looks like /home/username)

- Files

- create files: touch file-name.txt

- remove a file: rm file-name.txt

- rename a file: mv file-name.txt new-file-name.txt

- move a file: mv this.txt target-folder/

- Folders

- create folders: mkdir folder-name

- remove a folder: rm -R folder-name

- rename a folder: mv folder-name new-folder-name

- move a folder: mv folder-name ../../target-folder

- grep

- search recursively for a pattern in a directory: grep -r <pattern> <directory>

- search for a pattern in a file: grep <pattern> <file>

- search for a pattern in multiple files: grep <pattern> <file1> <file2> <file3>

- ignore case when searching: grep -i <pattern> <file>

- count the number of lines that match a pattern: grep -c <pattern> <file>

- print the line number for each match: grep -n <pattern> <file>

- print the lines before or after the matching line: grep -A <num_lines> <pattern> <file> or grep -B <num_lines> <pattern> <file>

- print the matching line and a few lines before and after it: grep -C <num_lines> <pattern> <file>
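To see what the grep options above compute, here is a small Python sketch that mimics grep -n, -i and -c on a list of lines. It is an approximation for illustration only: the helper `grep_lines` is hypothetical, and Python's `re` syntax differs in places from grep's default basic regular expressions.

```python
import re


def grep_lines(pattern, lines, ignore_case=False):
    """Return (line_number, line) pairs that match, like grep -n."""
    flags = re.IGNORECASE if ignore_case else 0
    regex = re.compile(pattern, flags)
    return [(n, line) for n, line in enumerate(lines, start=1)
            if regex.search(line)]


text = ["Ray is fast", "docker run hello-world", "ray.init()"]

matches = grep_lines("ray", text, ignore_case=True)  # like grep -n -i
for n, line in matches:
    print(f"{n}:{line}")
print(len(matches))  # like grep -c -i: the number of matching lines
```

Note that the count is of matching lines, so a line containing the pattern twice still counts once.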

ports/processes

- Show what is running on a port: lsof -i:<port-number>

- Kill a process: kill <process-id>

- Example of above two steps

$ lsof -i:3000

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

node 78070 myuser 24u IPv6 0xdc9d8g07c355e5f 0t0 TCP *:hbci (LISTEN)

$ kill 78070

- Show list of all node processes: ps -ef | grep node

- Kill all node processes: pkill -f node
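A quick way to check from Python whether something is listening on a port, similar in spirit to lsof -i:<port-number>, is to attempt a TCP connection. This is a sketch under assumptions: the helper `port_in_use` is hypothetical, it only checks TCP on the local machine, and unlike lsof it does not tell you which process owns the port.

```python
import socket


def port_in_use(port, host="127.0.0.1"):
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(1.0)
        # connect_ex returns 0 on success and an error code otherwise
        return s.connect_ex((host, port)) == 0


# Demonstrate with a listener on a free port chosen by the OS.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))  # port 0 asks the OS for any free port
server.listen(1)
port = server.getsockname()[1]
print(port_in_use(port))       # a listener exists on this port
server.close()
```

This can be handy before launching the workshop container, for example to see whether port 8888 is already taken on the host.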

VIM

- Insert mode: i

- Normal mode: Esc

- Write changes to file: :w

- Quit VIM: :q

- Save changes and quit VIM: :wq

- Undo last change: u

- Redo last undone change: Ctrl + r

Git

- Cloning and remotes

- Clone: git clone <url>

- Check where cloning from and committing to: git remote -v

- Add a remote repository: git remote add <remote-name> https://remote-url/repo-name.git

- Change the origin: git remote set-url origin <new-git-repo-url>

- Committing

- See status of staging area etc: git status

- Add all files in the repo to staging area: git add -A

- Add just one file to the staging area: git add <filename>

- Commit: git commit -m "my-message"

- Push: git push

- Branching

- Check out to a new branch: git checkout -b <branchname>

- Check out to an existing branch: git checkout <branchname>

- Delete a branch (cannot be the branch you're on!): git branch -D <branchname>

- Merge another branch into current branch

- Move to the branch you want to merge FROM: git checkout dev

- Pull down most recent remote version: git pull

- Move to the branch you want to merge TO: git checkout feature-branch-#25

- Merge the FROM branch (dev in this case) into the current branch: git merge dev

- Logs

- See log of activity (commits): git log

- See log with limited info (easier to read): git log --oneline

- Configs

- Change git user name locally: git config user.name "Marley McTesterson"

- Change git email locally: git config user.email example@email.com

- See git repo config settings (i.e. email, username etc): git config --list

- Diffs

- See what is changed currently compared to the most recent commit: git diff

- See what has changed in the current working tree vs a specific branch: git diff <branch-name> --

- Show the difference between two branches: git diff <branch1> <branch2>

- Removing/rebasing changes - Intermediate to Advanced

- Stash changes, switch branches, then restore them: git stash, git checkout <branch>, git stash pop

- Remove uncommitted changes (but save them for later, just in case): git stash

- Remove last commit: git reset --hard HEAD^

- Squash the last 3 commits into one single commit: git rebase -i HEAD~3

- Then in the editor, for any commits you want to keep leave pick. For anything you want to "squash" and not include that as a commit, change pick to squash.

- Squash everything after a given commit: git rebase -i e25340b where e25340b is the SHA1

- Repeat the pick/squash step from above for specific commits.

cURL

- Methods

- GET request: curl https://www.google.com/

- POST request: curl http://localhost:3000/myroute -H "Content-Type: application/json" -X POST -d '{"key1": "val1", "key2": "val2"}' (-H is a header, in this case setting our content type as json. -X is setting the method, post in this case. -d is the request body in JSON format.)

- PATCH request: curl http://localhost:3000/myroute -X PATCH -H 'Content-Type: application/json' -d '{"key1": "val1", "key2": "val2"}'

- DELETE request: curl http://localhost:3000/myroute -X DELETE

- Setting Authorization header: curl http://localhost:3000/myroute -H 'Authorization: Bearer <myreallylongtoken>'

- Basic Auth: Authenticate with a username and password while accessing a URL: curl -u <username>:<password> <url>

- Send a POST request with data from a file: curl -X POST -d @<filename> <url>

- Fetch only the headers of a URL: curl -I <url>

- Download the contents of a URL and save it to a file: curl -o <output_file> <url>
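For comparison, the curl POST request above can be expressed with Python's standard library. The sketch below only builds the request object and does not send it, since http://localhost:3000/myroute is a placeholder from the cheatsheet and no server is assumed to be running. The helper name `build_json_post` is hypothetical.

```python
import json
import urllib.request


def build_json_post(url, payload):
    """Build (but do not send) a JSON POST request."""
    body = json.dumps(payload).encode("utf-8")         # curl -d: request body
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},  # curl -H: header
        method="POST",                                 # curl -X: HTTP method
    )


req = build_json_post("http://localhost:3000/myroute",
                      {"key1": "val1", "key2": "val2"})
print(req.get_method(), req.full_url)
# urllib stores header names capitalized as "Content-type"
print(req.get_header("Content-type"))
```

With a server actually listening at that address, passing the request to `urllib.request.urlopen(req)` would send it.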

Serverless Framework

- Create a new serverless project: serverless create --template <template-name>

- Deploy a serverless project: serverless deploy

- Remove a deployed serverless project: serverless remove

Docker

- List all running containers: docker ps

- Stop a container: docker stop <container-id>

- Remove a container: docker rm <container-id>

- List all available images: docker images

- Remove an image: docker rmi <image-id>

macOS

- Show hidden files in Finder: Shift + Command + .

- Open a file or folder in Finder from Terminal: open <file/folder>

- Show all running processes: ps aux

- Kill a process: kill <pid>

- Restart the macOS Dock: killall Dock

Linux

- Update package manager: sudo apt-get update

- Install a package: sudo apt-get install <package-name>

- Remove a package: sudo apt-get remove <package-name>

- Show all running processes: ps aux

- Kill a process: kill <pid>

Windows

- We can fall back on Linux in this case as we will be running docker.

Docker

What is Docker?

Docker provides the ability to package and run an application in a loosely isolated environment called a container. The isolation and security allows you to run many containers simultaneously on a given host. Containers are lightweight and contain everything needed to run the application, so you do not need to rely on what is currently installed on the host. You can easily share containers while you work, and be sure that everyone you share with gets the same container that works in the same way.

Download CLI Cheat Sheet that we will go through next.

DOCKER INSTALLATION

Docker Desktop is available for Mac, Linux and Windows

- https://docs.docker.com/desktop

- https://docs.docker.com/get-started/introduction/get-docker-desktop/

View example projects that use Docker

Check out our docs for information on using Docker

Alternatively, on Linux, install Docker Engine

DOCKER IMAGES

Docker images are a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.

# Build an Image from a Dockerfile without the cache

docker build -t <image_name> . --no-cache

# List local images

docker images

# Delete an Image

docker rmi <image_name>

# Remove all unused images

docker image prune

DOCKER HUB

Docker Hub is a service provided by Docker for finding and sharing container images with your team. Learn more and find images at https://hub.docker.com

# Login into Docker

docker login -u <username>

# Publish an image to Docker Hub

docker push <username>/<image_name>

# Search Hub for an image

docker search <image_name>

# Pull an image from Docker Hub

docker pull <image_name>

DOCKER GENERAL COMMANDS

# Start the docker daemon

dockerd

# Get help with Docker. Can also use --help on all subcommands

docker --help

# Display system-wide information

docker info

DOCKER CONTAINERS

A container is a runtime instance of a Docker image. A container will always run the same, regardless of the infrastructure. Containers isolate software from its environment and ensure that it works uniformly despite differences, for instance, between development and staging.

# Create and run a container from an image, with a custom name:

docker run --name <container_name> <image_name>

# Run a container and publish its port(s) to the host:

docker run -p <host_port>:<container_port> <image_name>

# Run a container in the background

docker run -d <image_name>

# Start or stop an existing container:

docker start|stop <container_name> (or <container-id>)

# Remove a stopped container:

docker rm <container_name>

# Open a shell inside a running container:

docker exec -it <container_name> sh

# Fetch and follow the logs of a container:

docker logs -f <container_name>

# To inspect a running container:

docker inspect <container_name> (or <container_id>)

# To list currently running containers:

docker ps

# List all docker containers (running and stopped):

docker ps --all

# View resource usage stats

docker container stats
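
The `docker run` options listed above (`--name`, `-p`, `-d`) can be combined in one invocation. The following is a minimal sketch of a helper that assembles such a command line without running it. The function name `docker_run_command` and the container name `welcome` are hypothetical and chosen only for illustration.

```python
import shlex

def docker_run_command(image, name=None, ports=None, detach=False):
    """Return the argv list for `docker run` using the options covered above.

    `ports` maps host ports to container ports, e.g. {8080: 80}.
    """
    argv = ["docker", "run"]
    if detach:
        argv.append("-d")  # run in the background
    if name:
        argv += ["--name", name]  # custom container name
    for host_port, container_port in (ports or {}).items():
        argv += ["-p", f"{host_port}:{container_port}"]  # publish a port
    argv.append(image)
    return argv

# Hypothetical example: a named, detached container with one published port.
argv = docker_run_command(
    "docker/welcome-to-docker", name="welcome", ports={8080: 80}, detach=True
)
print(shlex.join(argv))
# To actually start the container, pass argv to subprocess.run(argv, check=True).
```

Building the command as a list keeps every argument separate, so names with spaces or special characters do not need manual quoting.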

Try Some Docker Commands

- Open your CLI terminal and start a container by running the command:

docker run hello-world

- You should receive a Hello from Docker! in the output.

- Run welcome-to-docker as a daemon, mapping container port 80 to host port 8080.

docker run -d -p 8080:80 docker/welcome-to-docker

- Visit http://localhost:8080 in your browser to access this container.

- Identify and stop the running welcome-to-docker container.

# view available docker images

docker image ls

# view running docker container processes

docker ps

# stop a running container by its CONTAINER ID or NAMES

docker stop <CONTAINER ID>
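
Identifying the container to stop can also be scripted. The sketch below assumes the listing was produced with `docker ps --format '{{json .}}'`, which prints one JSON object per container, and that the objects carry `ID` and `Image` fields. The sample records, including their IDs and names, are made up for illustration.

```python
import json

def find_container_ids(ps_json_lines, image):
    """Return the IDs of containers whose Image field equals `image`."""
    ids = []
    for line in ps_json_lines.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if record.get("Image") == image:
            ids.append(record["ID"])
    return ids

# Hypothetical sample, shaped like `docker ps --format '{{json .}}'` output.
sample = "\n".join([
    json.dumps({"ID": "aaaaaaaaaaaa", "Image": "docker/welcome-to-docker", "Names": "example_one"}),
    json.dumps({"ID": "bbbbbbbbbbbb", "Image": "hello-world", "Names": "example_two"}),
])

ids = find_container_ids(sample, "docker/welcome-to-docker")
print(ids)
# Each ID could then be passed to: docker stop <CONTAINER ID>
```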

Git

What is Git and why use it?

- Git is an open-source distributed version control system (DVCS) that allows developers to track and manage changes to their codebase.

- Git was developed by Linus Torvalds in 2005 for Linux kernel development.

- Many "free" hosted git providers exist: GitHub, GitLab, Bitbucket, etc.

- It's easy to run your own git server, in addition to at least two hosted git providers, to ensure digital sovereignty.

- Sources for Deep Dives:

Git for Digital Sovereignty

- The following article and letter by Dion Wiggins ring sovereign alarms:

- How to use Git for Sovereign Distributed Version Control?

- Do not depend on a single provider of git services, which may be under the coercive powers of another State.

- Set up your own private git server

- Set up your own personal or corporate gitlab server
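
One way to avoid depending on a single provider is to push the same repository to several remotes. The sketch below only generates the git commands; it does not run them. The remote names and URLs are hypothetical placeholders, and the function name `mirror_commands` is an assumption made for this example.

```python
import shlex

def mirror_commands(remotes):
    """Return git commands that register each remote and push everything to it."""
    commands = []
    for name, url in remotes.items():
        commands.append(["git", "remote", "add", name, url])
        commands.append(["git", "push", name, "--all"])   # all branches
        commands.append(["git", "push", name, "--tags"])  # all tags
    return commands

# Hypothetical remotes: one self-hosted server and one hosted provider.
remotes = {
    "own": "git@git.example.org:team/project.git",
    "hosted": "https://git.example.com/team/project.git",
}

commands = mirror_commands(remotes)
for command in commands:
    print(shlex.join(command))
```

Because every clone of a git repository holds the full history, any of these remotes can serve as the source of truth if another becomes unavailable.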

Ray

- Ray core walkthrough

- functions -> tasks

- classes -> actors

- basics of ray design

- Reinforcement learning from scratch with ray core

- pointers to libraries and ecosystem

Working with Ray Cluster

Let us get into the docker container and into the /home/ray/workshop/SovScaDesDisMaLOps/labs directory as we did at the end of Set Up.

We will do the following:

- start a ray cluster

- check its dashboard on the browser

- submit a python script and get its output

- check the status of the cluster

- finally stop the ray cluster

ray start --head --dashboard-host 0.0.0.0

shm-size WARNING

WARNING: The object store is using /tmp instead of /dev/shm because /dev/shm has only 3221225472 bytes available. This will harm performance! You may be able to free up space by deleting files in /dev/shm. If you are inside a Docker container, you can increase /dev/shm size by passing '--shm-size=9.98gb' to 'docker run' (or add it to the run_options list in a Ray cluster config). Make sure to set this to more than 30% of available RAM.

It might be best to get out of docker and restart it with the suggested '--shm-size=9.98gb' as we started with '--shm-size=3gb' to be conservative.
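
The numbers in the warning can be checked with a little arithmetic. The sketch below converts the reported byte count to GiB and computes the 30% threshold mentioned in the warning. It assumes Docker interprets `gb` in `--shm-size` as binary gigabytes, which matches the 3221225472 bytes reported above. The helper names are hypothetical.

```python
import os
import shutil

GIB = 1024 ** 3

def bytes_to_gib(n_bytes):
    """Convert a byte count to GiB."""
    return n_bytes / GIB

def shm_threshold_bytes(available_ram_bytes, percent=30):
    """Size that /dev/shm should exceed, per the 30% guideline in the warning."""
    return available_ram_bytes * percent // 100

# The warning reports 3221225472 bytes, i.e. the 3 GiB we started with.
print(bytes_to_gib(3221225472))

# Illustration: with 10 GiB of available RAM, the threshold is 3 GiB.
print(bytes_to_gib(shm_threshold_bytes(10 * GIB)))

# Inspect the current /dev/shm, if this system has one.
if os.path.isdir("/dev/shm"):
    usage = shutil.disk_usage("/dev/shm")
    print(f"/dev/shm total: {bytes_to_gib(usage.total):.2f} GiB")
```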

You should get output similar, but not identical, to the following:

output

--------------------

Ray runtime started.

--------------------

Next steps

To add another node to this Ray cluster, run

ray start --address='172.17.0.2:6379'

To connect to this Ray cluster:

import ray

ray.init()

To submit a Ray job using the Ray Jobs CLI:

RAY_ADDRESS='http://172.17.0.2:8265' ray job submit --working-dir . -- python my_script.py

See https://docs.ray.io/en/latest/cluster/running-applications/job-submission/index.html

for more information on submitting Ray jobs to the Ray cluster.

To terminate the Ray runtime, run

ray stop

To view the status of the cluster, use

ray status

To monitor and debug Ray, view the dashboard at

172.17.0.2:8265

If connection to the dashboard fails, check your firewall settings and network configuration.

We should be able to find the dashboard for the ray cluster at http://172.17.0.2:8265/ or http://localhost:8265/.

There is a file named my_script.py in the labs directory with the following contents:

import ray

runtime_env = {"pip": ["emoji"]}

ray.init(runtime_env=runtime_env)

@ray.remote
def f():
    import emoji
    return emoji.emojize('Python is :thumbs_up:')

print(ray.get(f.remote()))

Let us make sure that we can pass this python script to the ray cluster and get the desired output.

RAY_ADDRESS='http://172.17.0.2:8265' ray job submit --working-dir . -- python my_script.py

output

(base) ray@12dfbd942909:~/workshop/SovScaDesDisMaLOps/labs$ RAY_ADDRESS='http://172.17.0.2:8265' ray job submit --working-dir . -- python my_script.py

Job submission server address: http://172.17.0.2:8265

2025-08-27 10:16:56,952 INFO dashboard_sdk.py:338 -- Uploading package gcs://_ray_pkg_e8bdf52a9ba6994f.zip.

2025-08-27 10:16:56,952 INFO packaging.py:588 -- Creating a file package for local module '.'.

-------------------------------------------------------

Job 'raysubmit_dgEg6widN2VTcxuR' submitted successfully

-------------------------------------------------------

Next steps

Query the logs of the job:

ray job logs raysubmit_dgEg6widN2VTcxuR

Query the status of the job:

ray job status raysubmit_dgEg6widN2VTcxuR

Request the job to be stopped:

ray job stop raysubmit_dgEg6widN2VTcxuR

Tailing logs until the job exits (disable with --no-wait):

2025-08-27 10:16:57,027 INFO job_manager.py:531 -- Runtime env is setting up.

2025-08-27 10:16:59,233 INFO worker.py:1616 -- Using address 172.17.0.2:6379 set in the environment variable RAY_ADDRESS

2025-08-27 10:16:59,240 INFO worker.py:1757 -- Connecting to existing Ray cluster at address: 172.17.0.2:6379...

2025-08-27 10:16:59,255 INFO worker.py:1928 -- Connected to Ray cluster. View the dashboard at 172.17.0.2:8265

Python is 👍

------------------------------------------

Job 'raysubmit_dgEg6widN2VTcxuR' succeeded

------------------------------------------
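
The follow-up commands in the output (`ray job logs`, `ray job status`, `ray job stop`) all take the job ID. The following is a minimal sketch for pulling that ID out of captured output. The pattern is inferred from the sample output shown above, not from a documented format, so treat it as an assumption.

```python
import re

# Pattern inferred from the submission banner shown in the output above.
JOB_ID_PATTERN = re.compile(r"Job '(raysubmit_[A-Za-z0-9]+)' submitted successfully")

def extract_job_id(submit_output):
    """Return the job ID found in `ray job submit` output, or None."""
    match = JOB_ID_PATTERN.search(submit_output)
    return match.group(1) if match else None

sample_output = """
-------------------------------------------------------
Job 'raysubmit_dgEg6widN2VTcxuR' submitted successfully
-------------------------------------------------------
"""

job_id = extract_job_id(sample_output)
print(f"ray job logs {job_id}")
print(f"ray job status {job_id}")
```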

Let us examine the status of the ray cluster we started, using the ray status command.

ray status

```

======== Autoscaler status: 2025-08-27 10:24:51.593334 ========

Node status

---------------------------------------------------------------

Active:

1 node_49e8ee2c48c0cf79d5bfc18b2f3bdff60b5363f68b17edaa6a1391e1

Pending:

(no pending nodes)

Recent failures:

(no failures)

Resources

---------------------------------------------------------------

Total Usage:

0.0/8.0 CPU

0B/21.16GiB memory

0B/9.07GiB object_store_memory

Total Constraints:

(no request_resources() constraints)

Total Demands:

(no resource demands)

```
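
The resource lines in this report follow a `used/total name` shape, which makes them easy to pick out of captured text. The sketch below parses only lines of that shape and keeps the values as strings. The regular expression is an assumption based on the sample above rather than a documented format.

```python
import re

# Matches lines such as "0.0/8.0 CPU" or "0B/21.16GiB memory".
USAGE_LINE = re.compile(r"^\s*(\S+)/(\S+)\s+(\S+)\s*$")

def parse_usage(status_text):
    """Map each resource name to its (used, total) pair, kept as strings."""
    usage = {}
    for line in status_text.splitlines():
        match = USAGE_LINE.match(line)
        if match:
            used, total, resource = match.groups()
            usage[resource] = (used, total)
    return usage

# Excerpt copied from the `ray status` output above.
sample_status = """
Resources
---------------------------------------------------------------
Total Usage:
 0.0/8.0 CPU
 0B/21.16GiB memory
 0B/9.07GiB object_store_memory
Total Constraints:
 (no request_resources() constraints)
"""

usage = parse_usage(sample_status)
for resource, (used, total) in usage.items():
    print(f"{resource}: {used} of {total}")
```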

Let us now stop the ray cluster.

ray stop

It will give an output like:

Stopped all 6 Ray processes.

- We have now verified that the ray cluster starts and can run the scripts we submit to it.

- This is effectively what is done in more complex settings, where the ray cluster runs in a private or public cloud.

- To learn a bit faster, we will use the Jupyter notebook REPL environment in a browser instead of submitting scripts.

Ray in Jupyter Lab

Next we can start jupyter lab inside our docker container with no running ray clusters within it.

jupyter lab --ip 0.0.0.0 --no-browser --allow-root

output

$ jupyter lab --ip 0.0.0.0 --no-browser --allow-root

[I 2025-08-27 10:32:24.139 ServerApp] jupyter_lsp | extension was successfully linked.

[I 2025-08-27 10:32:24.153 ServerApp] jupyter_server_terminals | extension was successfully linked.

[I 2025-08-27 10:32:24.163 ServerApp] jupyterlab | extension was successfully linked.

[I 2025-08-27 10:32:24.165 ServerApp] Writing Jupyter server cookie secret to /home/ray/.local/share/jupyter/runtime/jupyter_cookie_secret

[I 2025-08-27 10:32:25.169 ServerApp] notebook_shim | extension was successfully linked.

[I 2025-08-27 10:32:25.326 ServerApp] notebook_shim | extension was successfully loaded.

[I 2025-08-27 10:32:25.329 ServerApp] jupyter_lsp | extension was successfully loaded.

[I 2025-08-27 10:32:25.331 ServerApp] jupyter_server_terminals | extension was successfully loaded.

[I 2025-08-27 10:32:25.342 LabApp] JupyterLab extension loaded from /home/ray/anaconda3/lib/python3.9/site-packages/jupyterlab

[I 2025-08-27 10:32:25.342 LabApp] JupyterLab application directory is /home/ray/anaconda3/share/jupyter/lab

[I 2025-08-27 10:32:25.343 LabApp] Extension Manager is 'pypi'.

[I 2025-08-27 10:32:25.647 ServerApp] jupyterlab | extension was successfully loaded.

[I 2025-08-27 10:32:25.647 ServerApp] Serving notebooks from local directory: /home/ray/workshop/SovScaDesDisMaLOps/labs

[I 2025-08-27 10:32:25.648 ServerApp] Jupyter Server 2.16.0 is running at:

[I 2025-08-27 10:32:25.648 ServerApp] http://12dfbd942909:8888/lab?token=64bc9471350814f6f13da0d250459236a8ceb36d11b31ad4

[I 2025-08-27 10:32:25.648 ServerApp] http://127.0.0.1:8888/lab?token=64bc9471350814f6f13da0d250459236a8ceb36d11b31ad4

[I 2025-08-27 10:32:25.648 ServerApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

[C 2025-08-27 10:32:25.670 ServerApp]

To access the server, open this file in a browser:

file:///home/ray/.local/share/jupyter/runtime/jpserver-4116-open.html

Or copy and paste one of these URLs:

http://12dfbd942909:8888/lab?token=64bc9471350814f6f13da0d250459236a8ceb36d11b31ad4

http://127.0.0.1:8888/lab?token=64bc9471350814f6f13da0d250459236a8ceb36d11b31ad4

The output should end with a list of URLs to copy and paste.

Open the URL http://127.0.0.1:8888/lab?token=.... with a long token of your own. Try http://localhost:8888/lab?token=.... if the IP address does not work.
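
Swapping the host while keeping the port and token intact can be done with the standard library. The following is a minimal sketch; the helper names `with_host` and `token_of` are hypothetical, and the URL is the one from the sample output above.

```python
from urllib.parse import parse_qs, urlsplit, urlunsplit

def with_host(url, host):
    """Return `url` with its host replaced, keeping port, path and query."""
    parts = urlsplit(url)
    netloc = f"{host}:{parts.port}" if parts.port else host
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))

def token_of(url):
    """Return the value of the `token` query parameter, or None."""
    return parse_qs(urlsplit(url).query).get("token", [None])[0]

# URL taken from the sample Jupyter output above; your token will differ.
url = "http://127.0.0.1:8888/lab?token=64bc9471350814f6f13da0d250459236a8ceb36d11b31ad4"

print(with_host(url, "localhost"))
print(token_of(url))
```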